feat(web/*): drop most things

Change-Id: I85dde8470a4cf737bc193e0b50d0a4b5ee6d7f56

parent cff6575948
commit 768f053416

26 changed files with 0 additions and 1940 deletions

@@ -1,153 +0,0 @@
# This file defines functions for generating an Atom feed.

{ depot, lib, pkgs, ... }:

with depot.nix.yants;

let
  inherit (builtins) foldl' map readFile replaceStrings sort;
  inherit (lib) concatStrings concatStringsSep max removeSuffix;
  inherit (pkgs) runCommand;

  # 'link' describes a related link to a feed, or feed element.
  #
  # https://validator.w3.org/feed/docs/atom.html#link
  link = struct "link" {
    rel = string;
    href = string;
  };

  # 'entry' describes a feed entry, for example a single post on a
  # blog. Some optional fields have been omitted.
  #
  # https://validator.w3.org/feed/docs/atom.html#requiredEntryElements
  entry = struct "entry" {
    # Identifies the entry using a universally unique and permanent URI.
    id = string;

    # Contains a human readable title for the entry. This value should
    # not be blank.
    title = string;

    # Content of the entry.
    content = option string;

    # Indicates the last time the entry was modified in a significant
    # way (in seconds since epoch).
    updated = int;

    # Names authors of the entry. Recommended element.
    authors = option (list string);

    # Related web pages, such as the web location of a blog post.
    links = option (list link);

    # Conveys a short summary, abstract, or excerpt of the entry.
    summary = option string;

    # Contains the time of the initial creation or first availability
    # of the entry.
    published = option int;

    # Conveys information about rights, e.g. copyrights, held in and
    # over the entry.
    rights = option string;
  };

  # 'feed' describes the metadata of the Atom feed itself.
  #
  # Some optional fields have been omitted.
  #
  # https://validator.w3.org/feed/docs/atom.html#requiredFeedElements
  feed = struct "feed" {
    # Identifies the feed using a universally unique and permanent URI.
    id = string;

    # Contains a human readable title for the feed.
    title = string;

    # Indicates the last time the feed was modified in a significant
    # way (in seconds since epoch). Will be calculated based on most
    # recently updated entry if unset.
    updated = option int;

    # Entries contained within the feed.
    entries = list entry;

    # Names authors of the feed. Recommended element.
    authors = option (list string);

    # Related web locations. Recommended element.
    links = option (list link);

    # Conveys information about rights, e.g. copyrights, held in and
    # over the feed.
    rights = option string;

    # Contains a human-readable description or subtitle for the feed.
    subtitle = option string;
  };

  # Feed generation functions:

  renderEpoch = epoch: removeSuffix "\n" (readFile (runCommand "date-${toString epoch}" { } ''
    date --date='@${toString epoch}' --utc --iso-8601='seconds' > $out
  ''));

  escape = replaceStrings [ "<" ">" "&" "'" ] [ "&lt;" "&gt;" "&amp;" "&#039;" ];

  elem = name: content: ''<${name}>${escape content}</${name}>'';

  renderLink = defun [ link string ] (l: ''
    <link href="${escape l.href}" rel="${escape l.rel}" />
  '');

  # Technically the author element can also contain 'uri' and 'email'
  # fields, but they are not used for the purpose of this feed and are
  # omitted.
  renderAuthor = author: ''<author><name>${escape author}</name></author>'';

  renderEntry = defun [ entry string ] (e: ''
    <entry>
      ${elem "title" e.title}
      ${elem "id" e.id}
      ${elem "updated" (renderEpoch e.updated)}
      ${if e ? published
        then elem "published" (renderEpoch e.published)
        else ""
      }
      ${if e ? content
        then ''<content type="html">${escape e.content}</content>''
        else ""
      }
      ${if e ? summary then elem "summary" e.summary else ""}
      ${concatStrings (map renderAuthor (e.authors or []))}
      ${if e ? subtitle then elem "subtitle" e.subtitle else ""}
      ${if e ? rights then elem "rights" e.rights else ""}
      ${concatStrings (map renderLink (e.links or []))}
    </entry>
  '');

  mostRecentlyUpdated = defun [ (list entry) int ] (entries:
    foldl' max 0 (map (e: e.updated) entries)
  );

  sortEntries = sort (a: b: a.published > b.published);

  renderFeed = defun [ feed string ] (f: ''
    <?xml version="1.0" encoding="utf-8"?>
    <feed xmlns="http://www.w3.org/2005/Atom">
      ${elem "id" f.id}
      ${elem "title" f.title}
      ${elem "updated" (renderEpoch (f.updated or (mostRecentlyUpdated f.entries)))}
      ${concatStringsSep "\n" (map renderAuthor (f.authors or []))}
      ${if f ? subtitle then elem "subtitle" f.subtitle else ""}
      ${if f ? rights then elem "rights" f.rights else ""}
      ${concatStrings (map renderLink (f.links or []))}
      ${concatStrings (map renderEntry (sortEntries f.entries))}
    </feed>
  '');
in
{
  inherit entry feed renderFeed renderEpoch;
}

@@ -1,70 +0,0 @@
# This creates the static files that make up my blog from the Markdown
# files in this repository.
#
# All blog posts are rendered from Markdown by cheddar.
{ depot, lib, pkgs, ... }@args:

with depot.nix.yants;

let
  inherit (builtins) readFile;
  inherit (depot.nix) renderMarkdown;
  inherit (depot.web) atom-feed;
  inherit (lib) singleton;

  # Type definition for a single blog post.
  post = struct "blog-post" {
    key = string;
    title = string;
    date = int;

    # Optional time at which this post was last updated.
    updated = option int;

    # Path to the Markdown file containing the post content.
    content = path;

    # Whether dangerous HTML tags should be filtered in this post. Can
    # be disabled to, for example, embed videos in a post.
    tagfilter = option bool;

    # Optional name of the author to display.
    author = option string;

    # Should this post be included in the index? (defaults to true)
    listed = option bool;

    # Is this a draft? (adds a banner indicating that the link should
    # not be shared)
    draft = option bool;

    # Previously each post title had a numeric ID. For these numeric
    # IDs, redirects are generated so that old URLs stay compatible.
    oldKey = option string;
  };

  # Rendering fragments for the HTML version of the blog.
  fragments = import ./fragments.nix args;

  # Functions for generating feeds for these blogs using //web/atom-feed.
  toFeedEntry = { baseUrl, ... }: defun [ post atom-feed.entry ] (post: rec {
    id = "${baseUrl}/${post.key}";
    title = post.title;
    content = readFile (renderMarkdown post.content);
    published = post.date;
    updated = post.updated or post.date;

    links = singleton {
      rel = "alternate";
      href = id;
    };
  });
in
{
  inherit post toFeedEntry;
  inherit (fragments) renderPost;

  # Helper function to determine whether a post should be included in
  # listings (on homepages, feeds, ...)
  includePost = post: !(fragments.isDraft post) && !(fragments.isUnlisted post);
}

@@ -1,95 +0,0 @@
# This file defines various fragments of the blog, such as the header
# and footer, as functions that receive arguments to be templated into
# them.
#
# An entire post is rendered by `renderPost`, which assembles the
# fragments together in a runCommand execution.
{ depot, lib, pkgs, ... }:

let
  inherit (builtins) filter map hasAttr replaceStrings;
  inherit (pkgs) runCommand writeText;
  inherit (depot.nix) renderMarkdown;

  # Generate a post list for all listed, non-draft posts.
  isDraft = post: (hasAttr "draft" post) && post.draft;
  isUnlisted = post: (hasAttr "listed" post) && !post.listed;

  escape = replaceStrings [ "<" ">" "&" "'" ] [ "&lt;" "&gt;" "&amp;" "&#039;" ];

  header = name: title: staticUrl: ''
    <!DOCTYPE html>
    <head>
      <meta charset="utf-8">
      <meta name="viewport" content="width=device-width, initial-scale=1">
      <meta name="description" content="${escape name}">
      <link rel="stylesheet" type="text/css" href="${staticUrl}/tvl.css" media="all">
      <link rel="icon" type="image/webp" href="/static/favicon.webp">
      <link rel="alternate" type="application/atom+xml" title="Atom Feed" href="https://tvl.fyi/feed.atom">
      <title>${escape name}: ${escape title}</title>
    </head>
    <body class="light">
      <header>
        <h1><a class="blog-title" href="/">${escape name}</a> </h1>
        <hr>
      </header>
  '';

  fullFooter = content: ''
      <hr>
      <footer>
        ${content}
      </footer>
    </body>
  '';

  draftWarning = writeText "draft.html" ''
    <p class="cheddar-callout cheddar-warning">
      <b>Note:</b> This post is a <b>draft</b>! Please do not share
      the link to it without asking first.
    </p>
    <hr>
  '';

  unlistedWarning = writeText "unlisted.html" ''
    <p class="cheddar-callout cheddar-warning">
      <b>Note:</b> This post is <b>unlisted</b>! Please do not share
      the link to it without asking first.
    </p>
    <hr>
  '';

  renderPost = { name, footer, staticUrl ? "https://static.tvl.fyi/${depot.web.static.drvHash}", ... }: post: runCommand "${post.key}.html" { } ''
    cat ${writeText "header.html" (header name post.title staticUrl)} > $out

    # Write the post title & date
    echo '<article><h2 class="inline">${escape post.title}</h2>' >> $out
    echo '<aside class="date">' >> $out
    date --date="@${toString post.date}" '+%Y-%m-%d' >> $out
    ${
      if post ? updated
      then ''date --date="@${toString post.updated}" '+ (updated %Y-%m-%d)' >> $out''
      else ""
    }
    ${if post ? author then "echo ' by ${post.author}' >> $out" else ""}
    echo '</aside>' >> $out

    ${
      # Add a warning to draft/unlisted posts to make it clear that
      # people should not share the post.

      if (isDraft post) then "cat ${draftWarning} >> $out"
      else if (isUnlisted post) then "cat ${unlistedWarning} >> $out"
      else "# Your ads could be here?"
    }

    # Write the actual post through cheddar's about-filter mechanism
    cat ${renderMarkdown { path = post.content; tagfilter = post.tagfilter or true; }} >> $out
    echo '</article>' >> $out

    cat ${writeText "footer.html" (fullFooter footer)} >> $out
  '';
in
{
  inherit isDraft isUnlisted renderPost;
}

@@ -1,29 +0,0 @@
# Expose all static assets as a folder. The derivation contains a
# `drvHash` attribute which can be used for cache-busting.
{ depot, lib, pkgs, ... }:

let
  storeDirLength = with builtins; (stringLength storeDir) + 1;
  logo = depot.web.tvl.logo;
in
lib.fix (self: pkgs.runCommand "tvl-static"
{
  passthru = {
    # Preserving the string context here makes little sense: While we are
    # referencing this derivation, we are not doing so via the nix store,
    # so it makes little sense for Nix to police the references.
    drvHash = builtins.unsafeDiscardStringContext (
      lib.substring storeDirLength 32 self.drvPath
    );
  };
} ''
  mkdir $out
  cp -r ${./.}/* $out
  cp ${logo.pastelRainbow} $out/logo-animated.svg
  cp ${logo.bluePng} $out/logo-blue.png
  cp ${logo.greenPng} $out/logo-green.png
  cp ${logo.orangePng} $out/logo-orange.png
  cp ${logo.purplePng} $out/logo-purple.png
  cp ${logo.redPng} $out/logo-red.png
  cp ${logo.yellowPng} $out/logo-yellow.png
'')

Binary file not shown (before: 18 KiB).

Binary file not shown (before: 105 KiB).

Binary file not shown (before: 72 KiB).

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

web/static/terminal.min.css (vendored): file diff suppressed because one or more lines are too long.

@@ -1,136 +0,0 @@
/* Jetbrains Mono font from https://www.jetbrains.com/lp/mono/
licensed under Apache 2.0. Thanks, Jetbrains! */
@font-face {
  font-family: jetbrains-mono;
  src: url(jetbrains-mono.woff2);
}

@font-face {
  font-family: jetbrains-mono;
  font-weight: bold;
  src: url(jetbrains-mono-bold.woff2);
}

@font-face {
  font-family: jetbrains-mono;
  font-style: italic;
  src: url(jetbrains-mono-italic.woff2);
}

@font-face {
  font-family: jetbrains-mono;
  font-weight: bold;
  font-style: italic;
  src: url(jetbrains-mono-bold-italic.woff2);
}

/* Generic-purpose styling */

body {
  max-width: 800px;
  margin: 40px auto;
  line-height: 1.6;
  font-size: 18px;
  padding: 0 10px;
  font-family: jetbrains-mono, monospace;
}

h1, h2, h3 {
  line-height: 1.2
}

/* Blog Posts */

article {
  line-height: 1.5em;
}

/* spacing between the paragraphs in blog posts */
article p {
  margin: 1.4em auto;
}

/* Blog styling */

.light {
  color: #383838;
}

.blog-title {
  color: inherit;
  text-decoration: none;
}

.footer {
  text-align: right;
}

.date {
  text-align: right;
  font-style: italic;
  float: right;
}

.inline {
  display: inline;
}

.lod {
  text-align: center;
}

.uncoloured-link {
  color: inherit;
}

pre {
  width: 100%;
  overflow: auto;
}

code {
  background: aliceblue;
}

img {
  max-width: 100%;
}

.cheddar-callout {
  display: block;
  padding: 10px;
}

.cheddar-question {
  color: #3367d6;
  background-color: #e8f0fe;
}

.cheddar-todo {
  color: #616161;
  background-color: #eeeeee;
}

.cheddar-tip {
  color: #00796b;
  background-color: #e0f2f1;
}

.cheddar-warning {
  color: #a52714;
  background-color: #fbe9e7;
}

kbd {
  background-color: #eee;
  border-radius: 3px;
  border: 1px solid #b4b4b4;
  box-shadow: 0 1px 1px rgba(0, 0, 0, .2), 0 2px 0 0 rgba(255, 255, 255, .7) inset;
  color: #333;
  display: inline-block;
  font-size: .85em;
  font-weight: 700;
  line-height: 1;
  padding: 2px 4px;
  white-space: nowrap;
}

@@ -1,3 +0,0 @@
set noparent

tazjin

@@ -1,333 +0,0 @@
We've now been working on our rewrite of Nix, [Tvix][], for a little more than
two years.

Our last written update was in September 2023, and although we did publish a
couple of things in the meantime (flokli's talk on Tvix at [NixCon
2023][nixcon2023], our interview at the [Nix Developer
Dialogues][nix-dev-dialogues-tvix], or tazjin's [talk on
tvix-eval][tvix-eval-ru] (in Russian)), we never found the time to write
something down.

In the meantime a lot has happened though, so it's time to change that :-)

Note: This blog post is intended for a technical audience that is already
intimately familiar with Nix, and knows what things like derivations or store
paths are. If you're new to Nix, this will not make a lot of sense to you!

## Evaluation regression testing

Most of the evaluator work has been driven by evaluating `nixpkgs`, and ensuring
that we produce the same derivations, and that their build results end up in the
same store paths.

Builds are not hooked up all the way to the evaluator yet, but for Nix code
without IFD (such as `nixpkgs`!) we can verify this property without building.
An evaluated Nix derivation's `outPath` (and `drvPath`) can be compared with
what C++ Nix produces for the same code, to determine whether we evaluated the
package (and all of its dependencies!) correctly [^1].

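To make the comparison above concrete, here is a minimal sketch of the check such a regression test performs: given the `drvPath`/`outPath` pairs each evaluator produced, keyed by attribute path, report every attribute where Tvix disagrees with C++ Nix or produced nothing at all. The `EvalPaths` type and `compare_paths` function are hypothetical; how the real CI tests collect and compare paths is not shown here.

```rust
use std::collections::BTreeMap;

/// Paths produced by evaluating a single derivation (hypothetical type).
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct EvalPaths {
    pub drv_path: String,
    pub out_path: String,
}

/// Checks every attribute C++ Nix produced against Tvix's result for the
/// same attribute and returns a description of each mismatch.
pub fn compare_paths(
    tvix: &BTreeMap<String, EvalPaths>,
    cpp_nix: &BTreeMap<String, EvalPaths>,
) -> Vec<String> {
    let mut problems = Vec::new();
    for (attr, expected) in cpp_nix {
        match tvix.get(attr) {
            None => problems.push(format!("{attr}: missing in Tvix output")),
            Some(actual) if actual != expected => {
                if actual.drv_path != expected.drv_path {
                    problems.push(format!(
                        "{attr}: drvPath {} != {}",
                        actual.drv_path, expected.drv_path
                    ));
                }
                if actual.out_path != expected.out_path {
                    problems.push(format!(
                        "{attr}: outPath {} != {}",
                        actual.out_path, expected.out_path
                    ));
                }
            }
            // Both evaluators agree on this attribute.
            Some(_) => {}
        }
    }
    problems
}
```

Because a `drvPath` depends on the paths of all input derivations, a mismatch may come from the attribute itself or from anything in its dependency tree.
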
We added integration tests in CI that ensure that the paths we calculate match
C++ Nix, and we are successfully evaluating fairly complicated expressions in
them. For example, we test against the Firefox derivation, which exercises some
of the more hairy bits in `nixpkgs` (like WASM cross-compilation
infrastructure). Yay!

Although we're avoiding fine-grained optimization until we're sure Tvix
evaluates all of `nixpkgs` correctly, we still want to have an idea about
evaluation performance and how our work affects it over time.

For this we extended our benchmark suite and integrated it with
[Windtunnel][windtunnel], which now regularly runs benchmarks and provides a
view into how the timings change from commit to commit.

In the future, we plan to run this as part of code review, before changes are
applied to our canonical branch, giving authors and reviewers an additional
signal without anyone having to run the benchmarks manually.

## ATerms, output path calculation, and `builtins.derivation`

We've implemented all of these features, which comprise the components needed to
construct derivations in the Nix language, and to allow us to perform the path
comparisons we mentioned before.

As an interesting side note, in C++ Nix `builtins.derivation` is not actually a
builtin! It is a piece of [bundled Nix code][nixcpp-builtins-derivation] that
massages some parameters and then calls the *actual* builtin:
`derivationStrict`. We've decided to keep this setup, and implemented support in
Tvix to have builtins defined in `.nix` source code.

These builtins return attribute sets with the previously mentioned `outPath` and
`drvPath` fields. Implementing them correctly meant that we needed to implement
output path calculation *exactly* the same way as Nix does (bit-by-bit).

Very little of how this output path calculation works is documented anywhere in
C++ Nix. It uses a subset of [ATerm][aterm] internally and produces
"fingerprints" containing hashes of these ATerms, which are then hashed again.
The intermediate hashes are not printed out anywhere (except if you [patch
Nix][nixcpp-patch-hashes] to do so).

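For the last step of that process, the sketch below follows how C++ Nix turns a fingerprint into a store path: the fingerprint has the form `<type>:sha256:<hex digest>:<store dir>:<name>` (for a derivation output the type is something like `output:out`), its SHA-256 digest is XOR-folded down to 20 bytes, and those bytes are written in Nix's own base32 alphabet. Computing SHA-256 itself is left out (in practice it would come from a crate such as `sha2`), the ATerm-level hashing that produces the inner digest is not shown, and the function names are invented for this example rather than taken from nix-compat.

```rust
/// Nix's base32 alphabet: digits and lowercase letters without e, o, u and t.
const NIXBASE32_ALPHABET: &[u8; 32] = b"0123456789abcdfghijklmnpqrsvwxyz";

/// Builds the string that is hashed to obtain a store path, for example
/// "output:out:sha256:<hex>:/nix/store:hello-2.12.1".
pub fn fingerprint(path_type: &str, sha256_hex: &str, store_dir: &str, name: &str) -> String {
    format!("{path_type}:sha256:{sha256_hex}:{store_dir}:{name}")
}

/// XOR-folds `digest` into N bytes (Nix shortens SHA-256 to 160 bits this way).
pub fn compress_hash<const N: usize>(digest: &[u8]) -> [u8; N] {
    let mut out = [0u8; N];
    for (i, byte) in digest.iter().enumerate() {
        out[i % N] ^= *byte;
    }
    out
}

/// Encodes bytes in Nix's base32 variant: the input is treated as a
/// little-endian number and its 5-bit groups are emitted from most to
/// least significant.
pub fn nixbase32_encode(input: &[u8]) -> String {
    if input.is_empty() {
        return String::new();
    }
    let len = (input.len() * 8 - 1) / 5 + 1;
    let mut out = String::with_capacity(len);
    for n in (0..len).rev() {
        let bit = n * 5;
        let (i, j) = (bit / 8, bit % 8);
        // u16 so that shifting the next byte left by up to 8 bits cannot overflow.
        let low = u16::from(input[i]) >> j;
        let high = if i + 1 < input.len() { u16::from(input[i + 1]) << (8 - j) } else { 0 };
        out.push(char::from(NIXBASE32_ALPHABET[usize::from((low | high) & 0x1f)]));
    }
    out
}

/// Assembles a store path from the (externally computed) SHA-256 digest of a
/// fingerprint string.
pub fn store_path(store_dir: &str, fingerprint_sha256: &[u8; 32], name: &str) -> String {
    let short: [u8; 20] = compress_hash(fingerprint_sha256);
    format!("{store_dir}/{}-{name}", nixbase32_encode(&short))
}
```

Feeding `store_path` the SHA-256 of a real fingerprint yields a path of the familiar `/nix/store/<32 characters>-<name>` shape, since 20 bytes always encode to 32 base32 characters.
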
We already did parts of this correctly when we started this work on
[go-nix][go-nix-outpath] a while ago, but found some more edge cases and
ultimately came up with a nicer interface for Tvix.

The internal Derivation data model, ATerm serialization and output path
calculation have all been split out into the more general-purpose
[nix-compat][nix-compat-derivation] crate, along with more documentation, unit
tests and a Derivation ATerm parser, so hopefully this is now more accessible
to everyone.

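A derivation's ATerm form is a single `Derive(...)` term built from tuples, lists and quoted strings. The sketch below shows the string escaping (double quote, backslash, newline, carriage return and tab are escaped) and how a key-sorted environment list such as `[("name","hello"),("system","x86_64-linux")]` is written out. It covers only this one field of the term, and `write_aterm_string`/`write_env` are hypothetical helpers, not the nix-compat serializer.

```rust
use std::collections::BTreeMap;

/// Appends `s` to `out` as a quoted ATerm string, escaping the characters
/// C++ Nix escapes in .drv files.
pub fn write_aterm_string(out: &mut String, s: &str) {
    out.push('"');
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            other => out.push(other),
        }
    }
    out.push('"');
}

/// Writes an environment as a list of (key, value) tuples. A BTreeMap keeps
/// the keys sorted, matching the ordering found in .drv files.
pub fn write_env(env: &BTreeMap<&str, &str>) -> String {
    let mut out = String::from("[");
    for (idx, (key, value)) in env.iter().enumerate() {
        if idx > 0 {
            out.push(',');
        }
        out.push('(');
        write_aterm_string(&mut out, key);
        out.push(',');
        write_aterm_string(&mut out, value);
        out.push(')');
    }
    out.push(']');
    out
}
```
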
Note that our builtin does *not* yet persist the Derivation anywhere "on
disk" (though we have a debug CL that does write it to a temporary directory,
in case we want to track down differences).

## `tvix-[ca]store`

Tvix now has a store implementation!

### The Nix model

Inside Nix, store path contents are normally hashed and communicated in NAR
format, which is very coarse and often wasteful: a single-bit change in one
file in a large store path causes a new NAR file to be uploaded to the binary
cache, which then needs to be downloaded.

Additionally, identifying everything by the SHA256 digest of its NAR
representation makes Nix store paths largely incompatible with other
content-addressed systems, as NAR is a very Nix-specific format.

### The more granular Tvix model

After experimenting with some concepts and ideas in Golang, mostly around how to
improve binary cache performance[^3], both on-disk and over the network, we
settled on a more granular, content-addressed and general-purpose format.

Internally, it behaves very similarly to how git handles tree objects, except
that blobs are identified by their raw BLAKE3 digests rather than some custom
encoding, and similarly, tree/directory objects use the BLAKE3 digest of their
canonical protobuf serialization as identifiers.

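The git-like structure can be pictured as a Merkle tree: a directory's identifier is a digest over a canonical, name-sorted encoding of its entries, and each entry carries the digest of its child. In this sketch `std`'s `DefaultHasher` stands in for BLAKE3 and an ad-hoc byte encoding stands in for the canonical protobuf serialization, so the resulting digests have nothing to do with the ones tvix-castore computes; only the shape of the computation is meant to carry over.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::BTreeMap;
use std::hash::Hasher;

/// Stand-in digest type; tvix-castore uses 32-byte BLAKE3 digests instead.
pub type Digest = u64;

pub fn digest_bytes(data: &[u8]) -> Digest {
    let mut h = DefaultHasher::new();
    h.write(data);
    h.finish()
}

/// A filesystem node, loosely modelled on files, directories and symlinks.
pub enum Node {
    File { contents: Vec<u8>, executable: bool },
    Directory(BTreeMap<String, Node>),
    Symlink { target: String },
}

/// Computes a node's digest. Directory entries are visited in name order
/// (BTreeMap), so the result does not depend on insertion order.
pub fn node_digest(node: &Node) -> Digest {
    match node {
        Node::File { contents, .. } => digest_bytes(contents),
        Node::Symlink { target } => digest_bytes(target.as_bytes()),
        Node::Directory(entries) => {
            let mut canonical = Vec::new();
            for (name, child) in entries {
                // Record the entry kind (and executable bit) next to its name.
                let (kind, flag) = match child {
                    Node::File { executable, .. } => (b'f', *executable as u8),
                    Node::Directory(_) => (b'd', 0),
                    Node::Symlink { .. } => (b'l', 0),
                };
                canonical.extend_from_slice(&(name.len() as u64).to_le_bytes());
                canonical.extend_from_slice(name.as_bytes());
                canonical.push(kind);
                canonical.push(flag);
                // The child's digest, not its contents, goes into the parent.
                canonical.extend_from_slice(&node_digest(child).to_le_bytes());
            }
            digest_bytes(&canonical)
        }
    }
}
```

Since children are serialized in name order, insertion order does not matter, while changing a single byte in any file changes the digest of every directory above it.
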
This provides some immediate benefits:

- We only need to store the same data once, even if it's used across different
  store paths.
- Transfers can be more granular and only need to fetch the data that's
  needed. Because everything is content-addressed, it can be fetched from
  anything supporting BLAKE3 digests, immediately making it compatible with
  other P2P systems (IPFS blake3 blobs, …), or general-purpose
  content-addressed caches ([bazel-remote]).

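The first benefit follows directly from keying blobs by their digest, as in this minimal in-memory sketch. `BlobStore` is a hypothetical type, and `DefaultHasher` again stands in for BLAKE3; a 64-bit non-cryptographic hash is not collision-resistant, so this is only suitable for illustration.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::Hasher;

/// Stand-in for a BLAKE3 digest (not collision-resistant; illustration only).
pub fn blob_digest(data: &[u8]) -> u64 {
    let mut h = DefaultHasher::new();
    h.write(data);
    h.finish()
}

/// A content-addressed blob store: blobs are keyed by their digest, so the
/// same bytes are kept once no matter how many store paths refer to them.
#[derive(Default)]
pub struct BlobStore {
    blobs: HashMap<u64, Vec<u8>>,
}

impl BlobStore {
    /// Stores `data` unless an identical blob is already present, and
    /// returns its digest either way.
    pub fn put(&mut self, data: &[u8]) -> u64 {
        let digest = blob_digest(data);
        self.blobs.entry(digest).or_insert_with(|| data.to_vec());
        digest
    }

    /// Looks a blob up by digest; any peer holding the same bytes could
    /// answer this request equally well.
    pub fn get(&self, digest: u64) -> Option<&[u8]> {
        self.blobs.get(&digest).map(Vec::as_slice)
    }

    pub fn len(&self) -> usize {
        self.blobs.len()
    }

    pub fn is_empty(&self) -> bool {
        self.blobs.is_empty()
    }
}
```
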
There are a lot more details about the data model and certain design decisions
in [the docs][castore-docs].

### Compatibility

However, we still want to stay compatible with Nix: calculating
"NAR-addressed" store paths the same way, supporting substitution from regular
Nix binary caches, and storing all the additional metadata about store paths.

We accomplished this by splitting the two different concerns into two separate
crates, `tvix-store` and `tvix-castore`, with the former holding all
Nix-specific metadata and functionality, and the latter being a general-purpose
content-addressed blob and filesystem tree storage system, which is usable in a
lot of contexts outside of Tvix too. For example, if you want to use
tvix-castore to write your own git alternative, or provide granular and
authenticated access into large scientific datasets, you could!

### Backends

In addition to a gRPC API and client bindings, there's support for local
filesystem-based backends, as well as for sled, an embedded K/V database.

We're also currently working on a backend supporting most common object
storage services, as well as on more granular seeking and content-defined
chunking for blobs.

### FUSE/virtiofs

A tvix-store can be mounted via FUSE, or exposed through virtiofs[^4].
Besides the obvious use (mounting and browsing the contents of the store), this
will allow lazy substitution of builds on remote builders, be it in
containerized or virtualized workloads.

We have an [example][tvix-boot-readme] in the repository that seeds GNU hello
into a throwaway store, then boots a MicroVM and executes it.

### nar-bridge, bridging binary caches

`nar-bridge` and the `NixHTTPPathInfoService` bridge `tvix-[ca]store` with
existing Nix binary caches and Nix.

The former exposes a `tvix-[ca]store` over the common Nix HTTP Binary Cache
interface (both read and write).

The latter allows Tvix to substitute from regular Nix HTTP Binary caches,
unpacking NARs and ingesting them on-the-fly into the castore model.
The necessary parsers for NARInfo, signatures etc. are also available in the
[nix-compat crate][nix-compat-narinfo].

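A `.narinfo` file served by a Nix HTTP binary cache is a plain list of `Key: value` lines such as `StorePath`, `URL`, `Compression`, `NarHash`, `NarSize`, `References` and `Sig`. The sketch below parses a few of those fields into a hypothetical `NarInfo` struct; it is far less strict than a real parser such as the one in nix-compat, which also validates store paths, hashes and signatures.

```rust
/// A few fields of a binary cache `.narinfo` (simplified, hypothetical type).
#[derive(Debug, Default, PartialEq)]
pub struct NarInfo {
    pub store_path: String,
    pub url: String,
    pub compression: Option<String>,
    pub nar_hash: String,
    pub nar_size: u64,
    pub references: Vec<String>,
    pub signatures: Vec<String>,
}

/// Parses `Key: value` lines. Unknown keys are ignored; a missing required
/// key or a malformed `NarSize` produces an error.
pub fn parse_narinfo(text: &str) -> Result<NarInfo, String> {
    let mut info = NarInfo::default();
    for line in text.lines().filter(|l| !l.trim().is_empty()) {
        // Split at the first colon only: values like `sha256:...` contain more.
        let (key, value) = line
            .split_once(':')
            .ok_or_else(|| format!("malformed line: {line:?}"))?;
        let value = value.trim();
        match key {
            "StorePath" => info.store_path = value.to_string(),
            "URL" => info.url = value.to_string(),
            "Compression" => info.compression = Some(value.to_string()),
            "NarHash" => info.nar_hash = value.to_string(),
            "NarSize" => {
                info.nar_size = value
                    .parse()
                    .map_err(|e| format!("invalid NarSize {value:?}: {e}"))?;
            }
            "References" => {
                info.references = value.split_whitespace().map(str::to_string).collect();
            }
            // A narinfo may carry several signatures, one per `Sig:` line.
            "Sig" => info.signatures.push(value.to_string()),
            _ => {}
        }
    }
    for (name, field) in [("StorePath", &info.store_path), ("URL", &info.url), ("NarHash", &info.nar_hash)] {
        if field.is_empty() {
            return Err(format!("missing {name}"));
        }
    }
    Ok(info)
}
```
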
## EvalIO / builtins interacting with the store more closely

tvix-eval itself is designed to be quite pure when it comes to IO: it doesn't
do any IO directly on its own. For the very little IO functionality it needs as
part of "basic interaction with paths" (like importing other `.nix` files), it
goes through an `EvalIO` interface, which is provided to the Evaluator struct on
instantiation.

This allows us to be a bit more flexible with what IO looks like in practice,
which becomes interesting for specific store implementations that might not
expose a POSIX filesystem directly, or targets where we don't have a filesystem
at all (like WASM).

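The pattern of routing all IO through a trait object can be sketched as below. The two-method `EvalIO` trait, the in-memory `MemoryIO` implementation and the toy `Evaluator` are hypothetical simplifications made for this example; the real `tvix-eval` interface has more methods and different signatures.

```rust
use std::collections::HashMap;
use std::io;
use std::path::{Path, PathBuf};

/// A reduced, hypothetical evaluator IO interface.
pub trait EvalIO {
    fn path_exists(&self, path: &Path) -> io::Result<bool>;
    fn read_to_string(&self, path: &Path) -> io::Result<String>;
}

/// IO backed by an in-memory map, e.g. for targets without a filesystem.
#[derive(Default)]
pub struct MemoryIO {
    files: HashMap<PathBuf, String>,
}

impl MemoryIO {
    pub fn with_file(mut self, path: &str, contents: &str) -> Self {
        self.files.insert(PathBuf::from(path), contents.to_string());
        self
    }
}

impl EvalIO for MemoryIO {
    fn path_exists(&self, path: &Path) -> io::Result<bool> {
        Ok(self.files.contains_key(path))
    }

    fn read_to_string(&self, path: &Path) -> io::Result<String> {
        self.files
            .get(path)
            .cloned()
            .ok_or_else(|| io::Error::new(io::ErrorKind::NotFound, path.display().to_string()))
    }
}

/// A toy evaluator that only ever sees `dyn EvalIO`, never the filesystem.
pub struct Evaluator {
    io: Box<dyn EvalIO>,
}

impl Evaluator {
    pub fn new(io: Box<dyn EvalIO>) -> Self {
        Evaluator { io }
    }

    /// Roughly what a `builtins.pathExists` implementation would call.
    pub fn builtin_path_exists(&self, path: &str) -> io::Result<bool> {
        self.io.path_exists(Path::new(path))
    }

    /// Roughly what a `builtins.readFile` implementation would call.
    pub fn builtin_read_file(&self, path: &str) -> io::Result<String> {
        self.io.read_to_string(Path::new(path))
    }
}
```

Swapping `MemoryIO` for an implementation backed by a real filesystem or a store requires no change on the evaluator side.
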
Using the `EvalIO` trait also lets `tvix-eval` avoid becoming too strongly
coupled to a specific store implementation, hashing scheme etc.[^2] As we can
extend the set of builtins available to the evaluator with "foreign builtins",
these can live in other crates.

Following this pattern, we started implementing some of the "basic" builtins
that deal with path access in `tvix-eval`, like:

- `builtins.pathExists`
- `builtins.readFile`

We also recently started working on more complicated builtins like
`builtins.filterSource` and `builtins.path`, which are also used in `nixpkgs`.

Both import a path into the store, and allow passing a Nix expression that's
used as a filter function for each path. `builtins.path` can also ensure that
the imported contents match a certain hash.

This required the builtins to interact with the store and evaluator in a very
tight fashion, as the filter function (written in Nix) needs to be repeatedly
executed for each path, and its return value is able to cause the store to skip
over certain paths (which it previously couldn't).

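The filter interaction can be sketched as a recursive walk that asks a callback about every child and does not descend into rejected directories. Here a Rust closure stands in for the evaluated Nix filter function, which in `builtins.filterSource` receives the path and its type ("regular", "directory", "symlink" or "unknown"), and the in-memory `Entry` tree is a hypothetical substitute for the filesystem and store.

```rust
use std::collections::BTreeMap;

/// A minimal in-memory file tree (hypothetical, for illustration).
pub enum Entry {
    Regular(Vec<u8>),
    Directory(BTreeMap<String, Entry>),
    Symlink(String),
}

fn kind(entry: &Entry) -> &'static str {
    match entry {
        Entry::Regular(_) => "regular",
        Entry::Directory(_) => "directory",
        Entry::Symlink(_) => "symlink",
    }
}

/// Walks `entry` rooted at `path` and returns the paths that would be
/// imported. The root is always included; every child is passed to
/// `filter`, and rejected directories are skipped without visiting their
/// contents.
pub fn filtered_paths<F>(path: &str, entry: &Entry, filter: &mut F) -> Vec<String>
where
    F: FnMut(&str, &str) -> bool,
{
    let mut imported = vec![path.to_string()];
    if let Entry::Directory(children) = entry {
        for (name, child) in children {
            let child_path = format!("{path}/{name}");
            if filter(&child_path, kind(child)) {
                imported.extend(filtered_paths(&child_path, child, filter));
            }
        }
    }
    imported
}
```
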
Getting the abstractions right there required some back-and-forth, but the
remaining changes should land quite soon.

## Catchables / tryEval

Nix has a limited exception system for dealing with user-generated errors:
`builtins.tryEval` can be used to detect if an expression fails (if
`builtins.throw` or `assert` are used to generate it). This feature requires
extra support in any Nix implementation, as errors may not necessarily cause the
Nix program to abort.

The C++ Nix implementation reuses the C++ language-provided exception system for
`builtins.tryEval`, which Tvix can't do (even if Rust had an equivalent system):

In C++ Nix, the runtime representation of the program in execution corresponds
to the Nix expression tree of the relevant source files. This means that an
exception raised in C++ code will automatically bubble up correctly since the
C++ and Nix call stacks are equivalent to each other.

Tvix compiles the Nix expressions to a bytecode program which may be mutated by
extra optimization rules (for example, we hope to eliminate as many thunks as
possible in the future). This means that such a correspondence between the state
of the runtime and the original Nix code is not guaranteed.

Previously, `builtins.tryEval` (which is implemented in Rust and can access VM
internals) just allowed the VM to recover from certain kinds of errors. This
proved to be insufficient, as it [blew up as soon as a `builtins.tryEval`-ed
thunk was forced again][tryeval-infrec]: extra bookkeeping was needed. As a
solution, we now store recoverable errors as a separate runtime value type.

As you can imagine, storing evaluation failures as "normal" values quickly leads
to all sorts of bugs because most VM/builtins code is written with only ordinary
values like attribute sets, strings etc. in mind.

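A toy model of recoverable errors stored as values is sketched below. `Value`, `Catchable`, `add` and `try_eval` are hypothetical simplifications, not the Tvix VM types. The sketch only illustrates two points from above: every operation has to pass a catchable through instead of treating it as an ordinary value, and `tryEval` turns it into `{ success = false; value = false; }`, which is what Nix returns when it catches an error.

```rust
use std::collections::BTreeMap;

/// Errors that `builtins.tryEval` is allowed to catch (toy subset).
#[derive(Debug, Clone, PartialEq)]
pub enum Catchable {
    Throw(String),
    AssertionFailed,
}

/// A toy runtime value; `Catchable` sits next to the ordinary variants.
#[derive(Debug, Clone, PartialEq)]
pub enum Value {
    Bool(bool),
    Int(i64),
    Attrs(BTreeMap<String, Value>),
    Catchable(Catchable),
}

/// A builtin written with catchables in mind: it passes them through
/// instead of reporting a type error. Uncatchable errors stay `Err`.
pub fn add(a: &Value, b: &Value) -> Result<Value, String> {
    match (a, b) {
        (Value::Catchable(c), _) | (_, Value::Catchable(c)) => Ok(Value::Catchable(c.clone())),
        (Value::Int(x), Value::Int(y)) => x
            .checked_add(*y)
            .map(Value::Int)
            .ok_or_else(|| "integer overflow".to_string()),
        _ => Err("cannot add these values".to_string()),
    }
}

/// `builtins.tryEval`: catchables become `{ success = false; value = false; }`,
/// anything else becomes `{ success = true; value = <v>; }`.
pub fn try_eval(v: Value) -> Value {
    let (success, value) = match v {
        Value::Catchable(_) => (false, Value::Bool(false)),
        other => (true, other),
    };
    let mut attrs = BTreeMap::new();
    attrs.insert("success".to_string(), Value::Bool(success));
    attrs.insert("value".to_string(), value);
    Value::Attrs(attrs)
}
```
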
While ironing those out, we made sure to supplement those fixes with as many
test cases for `builtins.tryEval` as possible. This will hopefully prevent any
regressions if, or rather when, we touch this system again. We already have some
ideas for replacing the `Catchable` value type with a cleaner representation,
but first we want to pin down all the unspoken behaviour.

## String contexts

|

||||

|

||||

For a long time, we had the [working theory][refscan-string-contexts] that we

|

||||

could get away with not implementing string contexts, and instead do reference

|

||||

scanning on a set of "known paths" (and not implement

|

||||

`builtins.unsafeDiscardStringContext`).

|

||||

|

||||

Unfortunately, we discovered that while this is *conceptually* true, due to a [bug in Nix][string-contexts-nix-bug] that's worked around in the `stdenv.mkDerivation` implementation, we can't currently do this and still calculate the same hashes.

Because hash compatibility is important for us at this point, we bit the bullet and added support for string contexts to our `NixString` implementation, implemented the context-related builtins, and added more unit tests that verify the string context behaviour of various builtins.

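As a rough illustration, here is a simplified sketch (not the actual `NixString` type). A context can be modelled as a set of store paths attached to the string's bytes. Concatenation takes the union of both contexts, and an equivalent of `builtins.unsafeDiscardStringContext` returns the same bytes with an empty context. The `ContextString` type and its methods are hypothetical. Real Nix contexts also distinguish several kinds of elements (plain paths, derivation outputs, whole derivations), which this sketch collapses into plain strings.

```rust
use std::collections::BTreeSet;

/// Hypothetical, simplified string-with-context: the bytes plus the set of
/// store paths the string refers to.
#[derive(Clone, Debug, PartialEq)]
struct ContextString {
    bytes: Vec<u8>,
    context: BTreeSet<String>,
}

impl ContextString {
    /// A string without any context, like a plain Nix string literal.
    fn plain(s: &str) -> Self {
        ContextString { bytes: s.as_bytes().to_vec(), context: BTreeSet::new() }
    }

    /// A string that refers to a store path, e.g. an interpolated path or
    /// derivation output.
    fn with_path(s: &str, store_path: &str) -> Self {
        let mut cs = Self::plain(s);
        cs.context.insert(store_path.to_string());
        cs
    }

    /// Concatenation (`a + b` or interpolation) unions both contexts.
    fn concat(&self, other: &ContextString) -> ContextString {
        let mut bytes = self.bytes.clone();
        bytes.extend_from_slice(&other.bytes);
        let context = self.context.union(&other.context).cloned().collect();
        ContextString { bytes, context }
    }

    /// Mirrors `builtins.unsafeDiscardStringContext`: same bytes, no context.
    fn discard_context(&self) -> ContextString {
        ContextString { bytes: self.bytes.clone(), context: BTreeSet::new() }
    }
}
```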
## Strings as byte strings


C++ Nix uses C-style zero-terminated strings internally. However, until recently, Tvix used standard Rust strings for string values. Since those are required to be valid UTF-8, we haven't been able to properly represent all the string values that Nix supports.

We recently converted our internal representation to byte strings, which allows us to treat a `Vec<u8>` as a "string-like" value.

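Here is a small, std-only Rust illustration of the difference; the helper names are hypothetical. Bytes in a legacy encoding such as Latin-1 are rejected by `String::from_utf8`, but a `Vec<u8>` holds and concatenates them verbatim. A lossy UTF-8 conversion is only needed at the point where the value is displayed.

```rust
/// Hypothetical helper: render a byte string for display, replacing invalid
/// UTF-8 sequences with U+FFFD only at this presentation boundary. The
/// underlying value keeps its exact bytes.
fn display_lossy(bytes: &[u8]) -> String {
    String::from_utf8_lossy(bytes).into_owned()
}

/// Nix-style concatenation on raw bytes never fails because of encoding.
fn concat_bytes(a: &[u8], b: &[u8]) -> Vec<u8> {
    let mut out = Vec::with_capacity(a.len() + b.len());
    out.extend_from_slice(a);
    out.extend_from_slice(b);
    out
}
```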
## JSON/TOML/XML


We added support for the `toJSON`, `toXML`, `fromJSON` and `fromTOML` builtins.


`toXML` is particularly exciting, as it's the only format that allows expressing (partially applied) functions. It's also used in some of Nix's own tests, so we can now include these in our unit test suite (and pass them, yay!).

## Builder protocol, drv->builder


We've been working on the builder protocol and Tvix's internal build representation.

Nix uses derivations (encoded in ATerm) as nodes in its build graph, but it refers to the other store paths used in a build *only* by those store paths. As mentioned before, store paths only address the inputs, not the content.

This poses a big problem in Nix as soon as builds are scheduled on remote builders: there is no guarantee that files at the same store path on the remote builder actually have the same contents as on the machine orchestrating the build. If a package is not binary reproducible, this can lead to so-called [frankenbuilds][frankenbuild].

This also introduces a dependency on the state that's present on the remote builder machine: whatever is in its store and matches the paths will be used, even if it was maliciously placed there.

To eliminate this hermeticity problem and increase the integrity of builds, we've decided to use content-addressing in the builder protocol.

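The difference can be sketched in a few lines of Rust. This is a toy model, not the Tvix builder protocol. The remote store is a plain map, and `DefaultHasher` stands in for a cryptographic digest such as SHA-256 only to keep the example dependency-free; it is not collision-resistant and must not be used this way in practice. An input-addressed lookup trusts whatever sits at a path. A content-addressed lookup instead checks the bytes against the digest the orchestrating machine expects.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::{Hash, Hasher};

/// Toy digest; a real system would use a cryptographic hash.
fn digest(contents: &[u8]) -> u64 {
    let mut h = DefaultHasher::new();
    contents.hash(&mut h);
    h.finish()
}

/// Hypothetical remote builder store: store path -> file contents.
struct RemoteStore {
    files: HashMap<String, Vec<u8>>,
}

impl RemoteStore {
    /// Input-addressed lookup: whatever is at the path is used, unchecked.
    fn fetch_by_path(&self, path: &str) -> Option<&[u8]> {
        self.files.get(path).map(|v| v.as_slice())
    }

    /// Content-addressed lookup: the caller names the expected digest, and
    /// mismatching contents (another build, or tampering) are rejected.
    fn fetch_verified(&self, path: &str, expected: u64) -> Result<&[u8], String> {
        let contents = self.files.get(path).ok_or_else(|| format!("{path} missing"))?;
        if digest(contents) == expected {
            Ok(contents.as_slice())
        } else {
            Err(format!("{path}: contents do not match the expected digest"))
        }
    }
}
```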
We're currently hacking on this at [Thaigersprint](https://thaigersprint.org/) and might have some more news to share soon!

--------------


That's it for now! Try out Tvix and hit us up on IRC or on our mailing list if you run into any snags or have any questions.

See you! :)

[^1]: We know that we calculated all dependencies correctly because of how their hashes are included in the hashes of their dependents, and so on. More on path calculation and input-addressed paths in the next section!

[^2]: That's the same reason why `builtins.derivation[Strict]` also lives in `tvix-glue`, not in `tvix-eval`.

[^3]: See [nix-casync](https://discourse.nixos.org/t/nix-casync-a-more-efficient-way-to-store-and-substitute-nix-store-paths/16539) for one example, investigating content-defined chunking (while still keeping the NAR format).

[^4]: Strictly speaking, not limited to tvix-store: literally anything providing a listing into tvix-castore nodes.

[Tvix]: https://tvix.dev
[aterm]: http://program-transformation.org/Tools/ATermFormat.html
[bazel-remote]: https://github.com/buchgr/bazel-remote/pull/715
[castore-docs]: https://code.tvl.fyi/tree/tvix/docs/src/castore
[frankenbuild]: https://blog.layus.be/posts/2021-06-25-frankenbuilds.html
[go-nix-outpath]: https://github.com/nix-community/go-nix/blob/93cb24a868562714f1691840e94d54ef57bc0a5a/pkg/derivation/hashes.go#L52
[nix-compat-derivation]: https://docs.tvix.dev/rust/nix_compat/derivation/struct.Derivation.html
[nix-compat-narinfo]: https://docs.tvix.dev/rust/nix_compat/narinfo/index.html
[nix-dev-dialogues-tvix]: https://www.youtube.com/watch?v=ZYG3T4l8RU8
[nixcon2023]: https://www.youtube.com/watch?v=j67prAPYScY
[tvix-eval-ru]: https://tazj.in/blog/tvix-eval-talk-2023
[nixcpp-builtins-derivation]: https://github.com/NixOS/nix/blob/49cf090cb2f51d6935756a6cf94d568cab063f81/src/libexpr/primops/derivation.nix#L4
[nixcpp-patch-hashes]: https://github.com/adisbladis/nix/tree/hash-tracing
[refscan-string-contexts]: https://inbox.tvl.su/depot/20230316120039.j4fkp3puzrtbjcpi@tp/T/#t
[store-docs]: https://code.tvl.fyi/about/tvix/docs/src/store/api.md
[string-contexts-nix-bug]: https://github.com/NixOS/nix/issues/4629
[tryeval-infrec]: https://b.tvl.fyi/issues/281
[tvix-boot-readme]: https://code.tvl.fyi/about/tvix/boot/README.md
[why-string-contexts-now]: https://cl.tvl.fyi/c/depot/+/10446/7/tvix/eval/docs/build-references.md
[windtunnel]: https://staging.windtunnel.ci/tvl/tvix

@@ -1,266 +0,0 @@

It's already been around half a year since [the last Tvix update][2024-02-tvix-update], so it's time for another one!

Note: This blog post is intended for a technical audience that is already intimately familiar with Nix, and knows what things like derivations or store paths are. If you're new to Nix, this will not make a lot of sense to you!

## Builds


A long-term goal is obviously to be able to use the expressions in nixpkgs to build things with Tvix. We made progress in many places towards that goal:

### Drive builds on IO


As already explained in our [first blog post][blog-rewriting-nix], in Tvix we want to make IFD a first-class citizen without a significant performance cost.

Nix tries hard to split evaluation and building into two phases. This is visible in the `nix-instantiate` command, which produces `.drv` files in `/nix/store`, and the `nix-build` command, which can be invoked on such `.drv` files without evaluation. Scheduling (like in Hydra) usually happens by walking the graph of `.drv` files produced in the first phase.

As soon as there's some IFD along the path, everything up to that point has to be built by the evaluator (which is why IFD is prohibited in nixpkgs).

Tvix does not have two separate "phases" in a build, only a graph of unfinished derivations/builds and their associated store paths. This graph does not need to be written to disk, and can grow at runtime as new derivations with new output paths are discovered.

Build scheduling happens continuously on that graph, for everything that's really needed, when it's needed.

We do this by only "forcing" the realization of a specific store path if the user ultimately wants that specific result to be available on their system, or, transitively, if something else wants it. This covers IFD in a very elegant way.

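Here is a heavily simplified sketch of this demand-driven approach. All names are hypothetical and none of them are Tvix APIs: `BuildGraph`, `add`, `realize`, and a `build_log` that stands in for actually running builds. Nodes are registered in an in-memory graph as they are discovered. A store path is only built when something asks for it, after its inputs have been realized recursively. Results are memoized, so a path demanded by both the user and an IFD during evaluation is built only once.

```rust
use std::collections::{HashMap, HashSet};

/// Hypothetical in-memory build graph: output path -> input paths.
/// It can grow while evaluation is still running (e.g. when IFD discovers
/// new derivations) and is never written to disk.
#[derive(Default)]
struct BuildGraph {
    inputs: HashMap<String, Vec<String>>,
    realized: HashSet<String>,
    build_log: Vec<String>,
}

impl BuildGraph {
    /// Record a newly discovered, not yet built node.
    fn add(&mut self, path: &str, inputs: &[&str]) {
        self.inputs
            .insert(path.to_string(), inputs.iter().map(|s| s.to_string()).collect());
    }

    /// Realize `path` only because something demands it, building its
    /// inputs first. Already-realized paths are not built again.
    /// (Derivation graphs are acyclic, so no cycle detection is done here.)
    fn realize(&mut self, path: &str) -> Result<(), String> {
        if self.realized.contains(path) {
            return Ok(());
        }
        let deps = self
            .inputs
            .get(path)
            .cloned()
            .ok_or_else(|| format!("unknown path {path}"))?;
        for dep in &deps {
            self.realize(dep)?;
        }
        // Stand-in for actually running the builder.
        self.build_log.push(path.to_string());
        self.realized.insert(path.to_string());
        Ok(())
    }
}
```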
We want to experiment more with this approach as we continue bringing up our build infrastructure.

### Fetchers


There are a few Nix builtins that allow describing a fetch (be it the download of a file from the internet or the clone of a git repo). These needed to be implemented for completeness. We implemented pretty much all downloads of tarballs, NARs and plain files, except git repositories, which are left for later.

Instead of doing these fetches immediately, we added a generic `Fetch` type that allows describing such fetches *before actually doing them*, similar to being able to describe builds. This lets us use the same "Drive builds on IO" machinery to delay these fetches to the point where they're needed. We also show progress bars when doing fetches.

Very early, during bootstrapping, nixpkgs relies on a `builtin:fetchurl` "fake" derivation, which has some special handling logic in Nix. We implemented these quirks by converting it to an instance of our `Fetch` type and dealing with it there in a consistent fashion.

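For illustration only, a `Fetch` description could look like the enum below. The variants and the `from_builtin_fetchurl` helper are hypothetical and do not reflect Tvix's actual definitions. The point is that a fetch is plain data that can sit in the build graph and be executed later. A `builtin:fetchurl` derivation can be translated into the same representation by reading its environment. This sketch assumes that the `url`, `outputHash` and `unpack` attributes are available there, and that `unpack = "1"` means the download is a NAR to be unpacked.

```rust
use std::collections::BTreeMap;

/// Hypothetical description of a fetch, recorded before anything is downloaded.
#[derive(Debug, PartialEq)]
enum Fetch {
    /// A plain file download, optionally pinned to an expected hash.
    Url { url: String, exp_hash: Option<String> },
    /// A tarball that gets unpacked after downloading.
    Tarball { url: String, exp_hash: Option<String> },
    /// A (possibly compressed) NAR that gets unpacked into the store.
    Nar { url: String, hash: String },
}

/// Hypothetical translation of a `builtin:fetchurl` derivation (given its
/// builder and environment) into a `Fetch`. Returns `None` for other builders
/// or when required attributes are missing.
fn from_builtin_fetchurl(builder: &str, env: &BTreeMap<String, String>) -> Option<Fetch> {
    if builder != "builtin:fetchurl" {
        return None;
    }
    let url = env.get("url")?.clone();
    let hash = env.get("outputHash").cloned();
    // Assumption: `unpack = "1"` marks the download as a NAR to unpack.
    if env.get("unpack").map(String::as_str) == Some("1") {
        Some(Fetch::Nar { url, hash: hash? })
    } else {
        Some(Fetch::Url { url, exp_hash: hash })
    }
}
```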
### More fixes, Refscan


With the above work done, and after fixing some small bugs[^3], we were already able to build our first few store paths with Tvix and our `runc`-based builder 🎉!

We didn't get too far, though, as we still need to implement reference scanning, so that's next on our TODO list. Stay tuned for further updates!

## Eval correctness & Performance


As already written in the previous update, we've been evaluating parts of `nixpkgs` and ensuring we produce the same derivations. We managed to find and fix some correctness issues there.

Even though we don't want to focus too much on performance improvements until all features of Nix are properly understood and representable with our architecture, there's been some work on removing some obvious and low-risk performance bottlenecks. Expect a detailed blog post about that soon after this one!

## Tracing / O11Y Support


Tvix got support for tracing, and is able to emit spans in [OpenTelemetry][opentelemetry]-compatible format.

This means that, if the necessary tooling is set up to collect such spans[^1], it's possible to see what's happening inside the different components of Tvix across process (and machine) boundaries.

Tvix now also propagates trace IDs via gRPC and HTTP requests[^2], and continues a trace when it receives a request carrying one.

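The W3C Trace Context `traceparent` header has the form `version-traceid-parentid-traceflags`, with lowercase hex fields of 2, 32, 16 and 2 characters; all-zero trace and parent IDs are invalid. The sketch below uses hypothetical helper names and handles only version `00`. It parses an incoming header and builds the header for an outgoing request that continues the same trace under a new span ID. In practice, an OpenTelemetry propagator would take care of this.

```rust
/// Parsed W3C `traceparent` header (version 00 only in this sketch).
#[derive(Debug, PartialEq)]
struct TraceParent {
    trace_id: String,  // 32 lowercase hex chars, not all zero
    parent_id: String, // 16 lowercase hex chars, not all zero
    flags: u8,         // bit 0 = sampled
}

fn is_lower_hex(s: &str, len: usize) -> bool {
    s.len() == len && s.bytes().all(|b| b.is_ascii_digit() || (b'a'..=b'f').contains(&b))
}

fn parse_traceparent(header: &str) -> Option<TraceParent> {
    let parts: Vec<&str> = header.trim().split('-').collect();
    if parts.len() != 4 || parts[0] != "00" {
        return None;
    }
    let (trace_id, parent_id, flags) = (parts[1], parts[2], parts[3]);
    if !is_lower_hex(trace_id, 32) || trace_id.bytes().all(|b| b == b'0') {
        return None;
    }
    if !is_lower_hex(parent_id, 16) || parent_id.bytes().all(|b| b == b'0') {
        return None;
    }
    if !is_lower_hex(flags, 2) {
        return None;
    }
    Some(TraceParent {
        trace_id: trace_id.to_string(),
        parent_id: parent_id.to_string(),
        flags: u8::from_str_radix(flags, 16).ok()?,
    })
}

/// Header for an outgoing request: same trace ID and flags, with our own
/// span ID as the new parent. `span_id` must be non-zero.
fn child_traceparent(incoming: &TraceParent, span_id: u64) -> String {
    format!("00-{}-{:016x}-{:02x}", incoming.trace_id, span_id, incoming.flags)
}
```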
As an example, this allows us to get "callgraphs" of how a tvix-store operation is processed through a multi-node deployment, and to find bottlenecks and places to optimize.

Currently, this is compiled in by default, trying to send traces to an endpoint at `localhost` (as per the official [SDK defaults][otlp-sdk]). It can be disabled by building without the `otlp` feature, or by running with the `--otlp=false` CLI flag.

This piggy-backs on the excellent [tracing][tracing-rs] crate, which we already use for structured logging, so while at it, we improved some log messages and fields to make it easier to filter for certain types of events.

We also added support for sending out [Tracy][tracy] traces, though these are disabled by default.

Additionally, some CLI entrypoints can now report progress to the user! For example, when we're fetching something during evaluation (via `builtins.fetchurl`) or uploading store path contents, we can report on this. See [here][asciinema-import] for an example.

We still consider these outputs early prototypes, and will refine them as we go.

## tvix-castore ingestion generalization


We spent some time refactoring and generalizing the tvix-castore importer code.

It's now generalized over a stream of "ingestion entries" produced in a certain order. There are various producers of this stream: reading through the local filesystem, reading through a NAR, reading through a tarball, and soon traversing the contents of a git repo, ….

This avoided a lot of code duplication across these formats, and allowed us to pull out helper code for concurrent blob uploading.

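Here is a minimal sketch of the idea with hypothetical types; it is not the real tvix-castore API. All producers (a filesystem walk, a NAR reader, a tarball reader) emit the same `IngestionEntry` values, and a single consumer turns them into directory listings. The sketch assumes a particular ordering: every child arrives before its parent directory (a post-order traversal), so a directory can be finalized as soon as its own entry appears. The root is the empty path, and file sizes and symlink targets are carried along but not used.

```rust
use std::collections::BTreeMap;

/// Hypothetical ingestion entry, shared by all producers.
#[derive(Debug)]
enum IngestionEntry {
    File { path: String, size: u64 },
    Symlink { path: String, target: String },
    Dir { path: String },
}

impl IngestionEntry {
    fn path(&self) -> &str {
        match self {
            IngestionEntry::File { path, .. }
            | IngestionEntry::Symlink { path, .. }
            | IngestionEntry::Dir { path } => path.as_str(),
        }
    }
}

/// Split "a/b/c" into ("a/b", "c"); top-level names have the root "" as parent.
fn parent_and_name(path: &str) -> (&str, &str) {
    match path.rfind('/') {
        Some(i) => (&path[..i], &path[i + 1..]),
        None => ("", path),
    }
}

/// Consume entries (children before parents) into directory listings,
/// mapping each directory path to its sorted child names. Fails if an entry
/// shows up after its parent directory was already finalized.
fn ingest<I: IntoIterator<Item = IngestionEntry>>(
    entries: I,
) -> Result<BTreeMap<String, Vec<String>>, String> {
    let mut pending: BTreeMap<String, Vec<String>> = BTreeMap::new();
    let mut done: BTreeMap<String, Vec<String>> = BTreeMap::new();
    for entry in entries {
        let path = entry.path().to_string();
        if matches!(entry, IngestionEntry::Dir { .. }) {
            // All children have been seen already; finalize this directory.
            let mut children = pending.remove(&path).unwrap_or_default();
            children.sort();
            done.insert(path.clone(), children);
        }
        if path.is_empty() {
            continue; // the root has no parent
        }
        let (parent, name) = parent_and_name(&path);
        if done.contains_key(parent) {
            return Err(format!("{path} arrived after its parent directory {parent:?}"));
        }
        pending.entry(parent.to_string()).or_default().push(name.to_string());
    }
    Ok(done)
}
```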
## More tvix-[ca]store backends


We added some more store backends to Tvix:


- There's a [redb][redb] `PathInfoService` and `DirectoryService`, which also replaced the previous `sled` default backend.
- There's a [bigtable][bigtable] `PathInfoService` and `DirectoryService` backend.
- The "simplefs" `BlobService` has been removed, as it can be expressed using the "objectstore" backend with a `file://` URI.
- There's been some work on feature-flagging certain backends.

## Documentation reconciliation

Various bits and pieces of documentation had previously been scattered throughout the Tvix codebase, which made them hard to find and quite confusing.

These have been consolidated into an mdBook (at `//tvix/docs`).

We plan to properly host these as a website, hopefully providing a better introduction to and overview of Tvix, while adding more content over time.

## `nar-bridge` RIIR


While the Go implementation of `nar-bridge` did serve us well for a while, the fact that it was the only remaining non-Rust part was a bit annoying.

Features added there would not be accessible in the rest of Tvix, and the other way round. Also, we could not open data stores directly from there, but always had to start a separate `tvix-store daemon`.

The initial plans for the Rust rewrite were made quite a while ago, but we finally managed to finish implementing the remaining bits. `nar-bridge` is now fully written in Rust, providing the same CLI experience, features and store backends as the rest of Tvix.

## `crate2nix` and overall Rust Nix improvements

We landed some fixes in [crate2nix][crate2nix], the tool we're using for per-crate incremental builds of Tvix.

It now supports the corner cases needed to build WASM, so [Tvixbolt][tvixbolt] is now built with it, too.

We also fixed some bugs in how test directories are prepared, which unlocked running more tests for filesystem-related builtins such as `readDir` in our test suite.

Additionally, there have been some general improvements to ensure that various combinations of Tvix feature flags build (now continuously checked by CI). We also reduced the number of unnecessary rebuilds by filtering out non-source-code files before building.

These should all improve DX while working on Tvix.

## Store Composition


Another big missing feature that landed was Store Composition. We briefly spoke about the Tvix Store Model in the last update, but we didn't go into much detail on how it would work when there are multiple potential sources for a store path or for more granular contents. That is pretty much always the case: think of using things from your local store, or else falling back to a remote place.

Nix has the default model of using `/nix/store` with a SQLite database for metadata as a local store, and one or multiple "substituters" using the Nix HTTP Binary Cache protocol.

In Tvix, things need to be a bit more flexible:

- You might be in a setting where you don't have a local `/nix/store` at all.
- You might want to have a view of different substituters/binary caches for different users.
- You might want to explicitly specify caches in between some of these layers, and control their config.

The idea in Tvix is that you'll be able to combine "hierarchies of stores" through runtime configuration to express all this.

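The following hedged Rust sketch illustrates composing stores. It is not the `xp-store-composition` implementation, and it does not show its TOML configuration format. `BlobStore`, `MemoryStore` and `Layered` are hypothetical names. An in-memory store stands in for both a local store and a remote cache. The `Layered` combinator consults the near store first, falls back to the far one, and caches what it fetched in the near store. Because the combinator itself implements the trait, layers can be nested into arbitrary hierarchies.

```rust
use std::cell::{Cell, RefCell};
use std::collections::HashMap;

/// Hypothetical minimal store interface: look up and add contents by digest.
trait BlobStore {
    fn get(&self, digest: &str) -> Option<Vec<u8>>;
    fn put(&self, digest: &str, data: Vec<u8>);
}

/// In-memory store, standing in for a local disk store or a remote cache.
#[derive(Default)]
struct MemoryStore {
    blobs: RefCell<HashMap<String, Vec<u8>>>,
    lookups: Cell<usize>, // counts `get` calls, for illustration
}

impl BlobStore for MemoryStore {
    fn get(&self, digest: &str) -> Option<Vec<u8>> {
        self.lookups.set(self.lookups.get() + 1);
        self.blobs.borrow().get(digest).cloned()
    }
    fn put(&self, digest: &str, data: Vec<u8>) {
        self.blobs.borrow_mut().insert(digest.to_string(), data);
    }
}

/// Combinator: try `near` first, fall back to `far`, and populate `near`
/// with anything fetched from `far`. It is itself a `BlobStore`, so layers
/// can be stacked.
struct Layered<N: BlobStore, F: BlobStore> {
    near: N,
    far: F,
}

impl<N: BlobStore, F: BlobStore> BlobStore for Layered<N, F> {
    fn get(&self, digest: &str) -> Option<Vec<u8>> {
        if let Some(data) = self.near.get(digest) {
            return Some(data);
        }
        let data = self.far.get(digest)?;
        self.near.put(digest, data.clone());
        Some(data)
    }
    fn put(&self, digest: &str, data: Vec<u8>) {
        self.near.put(digest, data);
    }
}
```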
It's currently behind an `xp-store-composition` feature flag, which adds the optional `--experimental-store-composition` CLI arg, pointing to a TOML file specifying the composition configuration. If set, this takes priority over the old CLI args for the three (single) stores.

We're still not 100% sure how best to expose this functionality in a user-friendly format, or what the appropriate level of granularity is.

There are also some more combinators and refactors missing, but please let us know your thoughts!

## Contributors


There's been a lot of progress, which would not have been possible without our contributors! Be it small drive-by contributions or large efforts, thank you all!

- Adam Joseph
- Alice Carroll
- Aspen Smith
- Ben Webb
- binarycat
- Brian Olsen
- Connor Brewster
- Daniel Mendler
- edef
- Edwin Mackenzie-Owen
- espes
- Farid Zakaria
- Florian Klink
- Ilan Joselevich
- Luke Granger-Brown
- Markus Rudy
- Matthew Tromp
- Moritz Sanft
- Padraic-O-Mhuiris
- Peter Kolloch
- Picnoir
- Profpatsch
- Ryan Lahfa
- Simon Hauser
- sinavir
- sterni
- Steven Allen
- tcmal
- toastal
- Vincent Ambo
- Yureka

---


That's it again! Try out Tvix and hit us up on IRC or on our mailing list if you run into any snags or have any questions.

[^1]: Essentially, deploying a collecting agent on your machines that accepts these traces.

[^2]: Using the `traceparent` header field from https://www.w3.org/TR/trace-context/#trace-context-http-headers-format


[^3]: Like `builtins.toFile` not adding files yet, or `inputSources` being missed initially (duh!).

[2024-02-tvix-update]: https://tvl.fyi/blog/tvix-update-february-24
[opentelemetry]: https://opentelemetry.io/
[otlp-sdk]: https://opentelemetry.io/docs/languages/sdk-configuration/otlp-exporter/
[tracing-rs]: https://tracing.rs/
[tracy]: https://github.com/wolfpld/tracy
[asciinema-import]: https://asciinema.org/a/Fs4gKTFFpPGYVSna0xjTPGaNp
[blog-rewriting-nix]: https://tvl.fyi/blog/rewriting-nix
[crate2nix]: https://github.com/nix-community/crate2nix
[redb]: https://github.com/cberner/redb
[bigtable]: https://cloud.google.com/bigtable
[tvixbolt]: https://bolt.tvix.dev/

@@ -1,42 +0,0 @@

{ depot, ... }:

{
  config = {
    name = "TVL's blog";
    footer = depot.web.tvl.footer { };
    baseUrl = "https://tvl.fyi/blog";
  };

  posts = builtins.sort (a: b: a.date > b.date) [
    {
      key = "rewriting-nix";
      title = "Tvix: We are rewriting Nix";
      date = 1638381387;
      content = ./rewriting-nix.md;
      author = "tazjin";
    }

    {
      key = "tvix-status-september-22";
      title = "Tvix Status - September '22";
      date = 1662995534;
      content = ./tvix-status-202209.md;
      author = "tazjin";
    }

    {
      key = "tvix-update-february-24";
      title = "Tvix Status - February '24";
      date = 1707472132;
      content = ./2024-02-tvix-update.md;
      author = "flokli";
    }
    {
      key = "tvix-update-august-24";
      title = "Tvix Status - August '24";
      date = 1723219370;
      content = ./2024-08-tvix-update.md;
      author = "flokli";
    }
  ];
}

@@ -1,90 +0,0 @@

Evaluating the Nix programming language, used by the Nix package manager, is currently very slow. This becomes apparent in all projects written in Nix that are not just simple package definitions, for example:

* the NixOS module system
* TVL projects like [`//nix/yants`](https://at.tvl.fyi/?q=%2F%2Fnix%2Fyants) and [`//web/bubblegum`](https://at.tvl.fyi/?q=%2F%2Fweb%2Fbubblegum).
* the code that [generates build instructions](https://at.tvl.fyi/?q=%2F%2Fops%2Fpipelines) for TVL's [CI setup](https://tvl.fyi/builds)

Whichever project you pick, they all suffer from issues with the language implementation. At TVL, it currently takes us close to a minute to create the CI instructions for our monorepo, despite it being a plain Nix evaluation. Running our Nix-native build systems for [Go](https://code.tvl.fyi/about/nix/buildGo) and [Common Lisp](https://code.tvl.fyi/about/nix/buildLisp) takes much more time than we would like.

Some time last year a few of us got together and started investigating ways to modernise the current architecture of Nix and figure out how to improve the speed of some of the components. We created over [250 commits](https://cl.tvl.fyi/q/topic:tvix) in our fork of the Nix 2.3 codebase at the time, tried [performance experiments](https://cl.tvl.fyi/c/depot/+/1123/) aimed at improving the current evaluator and fought [gnarly bugs](https://cl.tvl.fyi/c/depot/+/1504).

After a while we realised that we were treading water: some of our ideas are too architecturally divergent from Nix to be done on top of the existing codebase, and the memory model of Nix causes significant headaches when trying to do any kind of larger change.

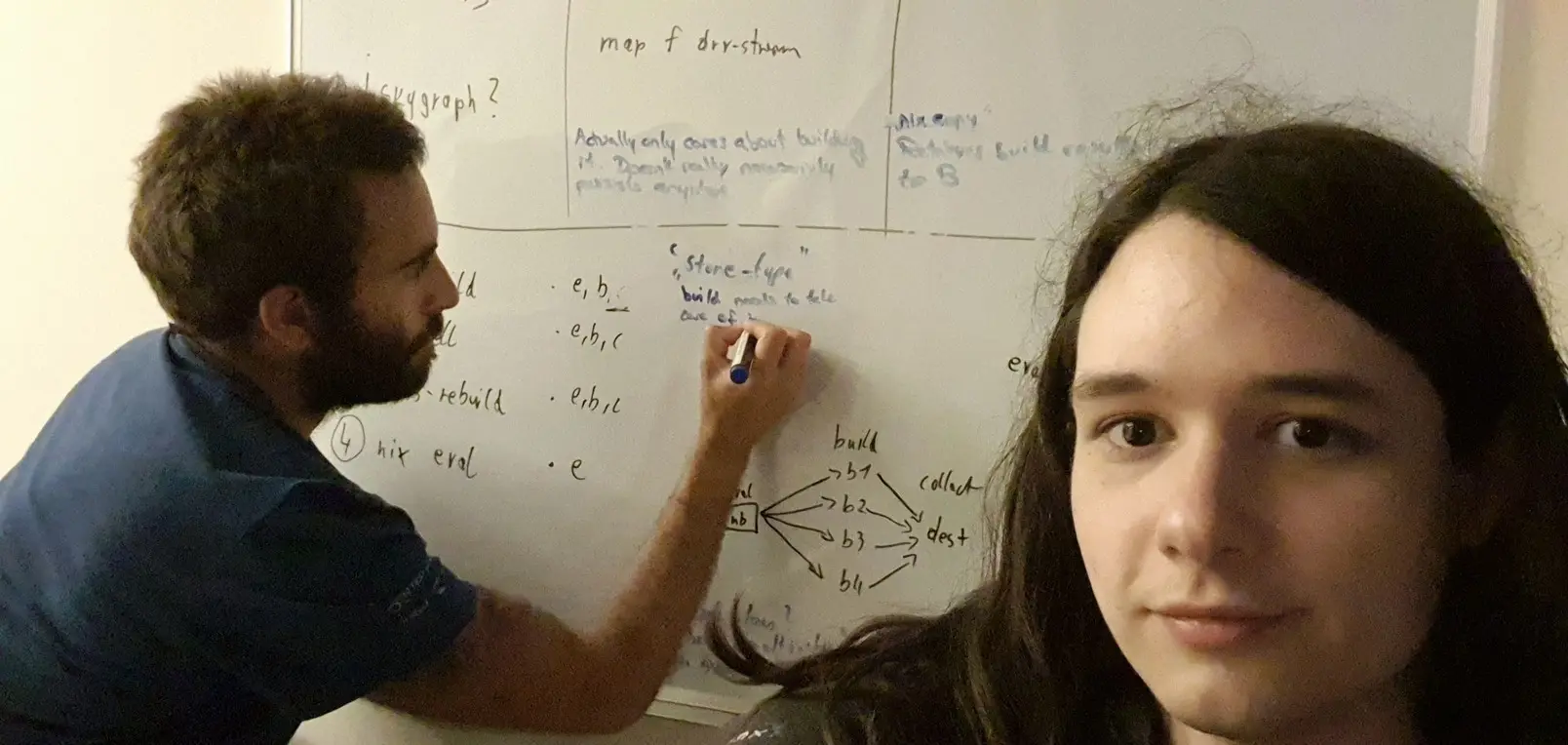

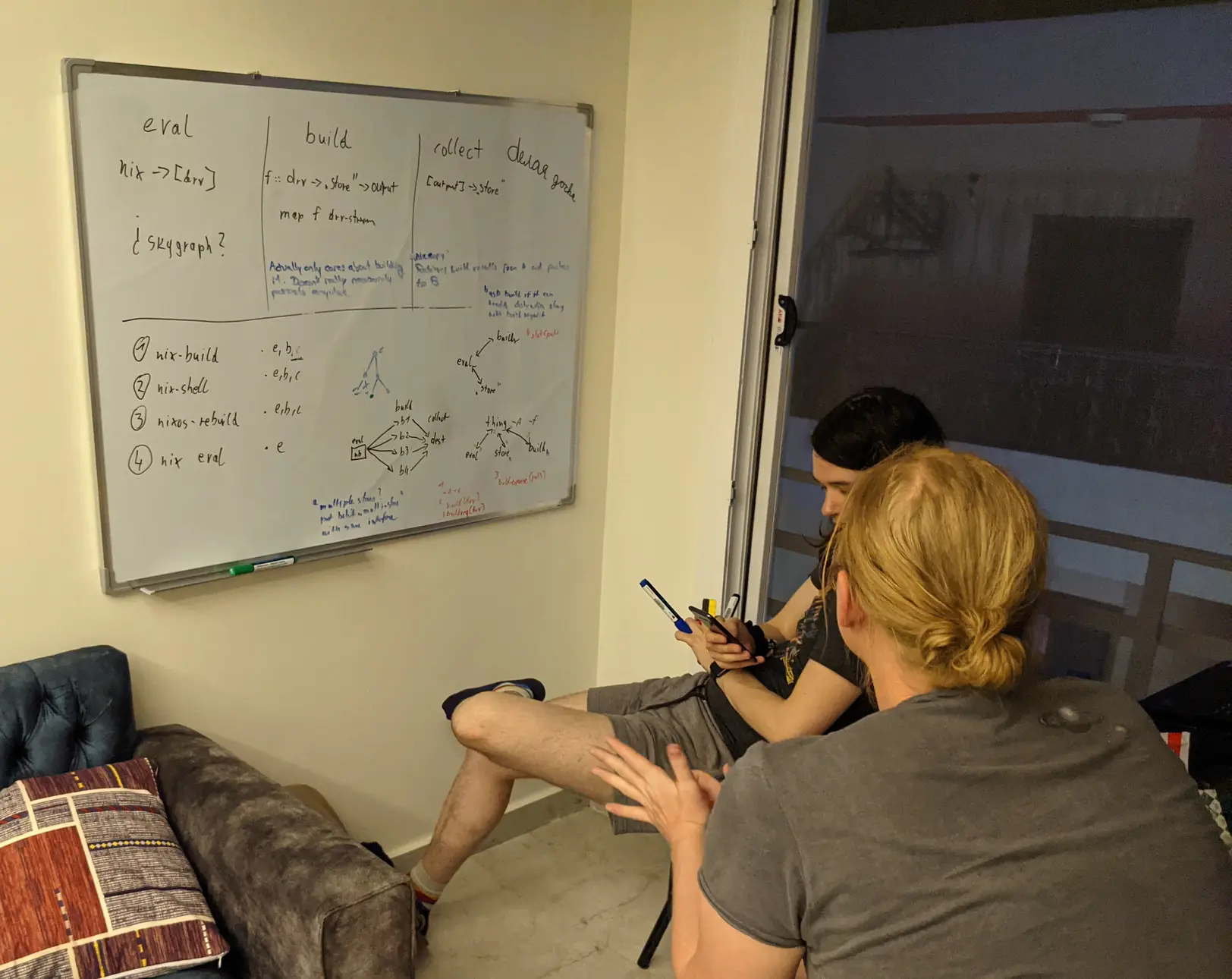

We needed an alternative approach and started brainstorming on a bent whiteboard in a small flat in Hurghada, Egypt.

Half a year later we are now ready to announce our new project: **Tvix**, a re-imagined Nix with full nixpkgs compatibility. Tvix is generously funded [by NLnet](https://nlnet.nl/project/Tvix/) (thanks!) and we are ready to start implementing it.

The [Tvix architecture](https://code.tvl.fyi/about/tvix/docs/components.md) is designed to be modular: it should be possible to write an evaluator that plugs in the Guile language (for compatibility with GNU Guix), to use arbitrary builders, and to replace the store implementation.

Tvix has these high-level goals:


* Creating an alternative implementation of Nix that is **fully compatible with nixpkgs**.

  The package collection is an enormous effort with hundreds of thousands of commits, encoding expert knowledge about lots of different software and ways of building and managing it. It is a very valuable piece of software and we must be able to reuse it.

* More efficient Nix language evaluation, leading to greatly increased performance.

* No more strict separation of evaluation and build phases: generating Nix data structures from build artefacts ("IFD") should be supported first-class and not incur significant performance cost.

* Well-defined interaction protocols for how the three different components (evaluator, builder, store) interact.

* A builder implementation using OCI instead of custom sandboxing code.

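The goal of well-defined interaction protocols can be pictured as narrow interfaces between the three components. The Rust traits below are a hypothetical sketch for illustration only, not the actual Tvix interfaces. `BuildRequest`, `Store`, `Builder`, `realise` and the toy implementations are all assumptions. Because the orchestration code only talks to the store and the builder through these traits, either side could be swapped out, in line with the modularity goal described above.

```rust
use std::collections::HashSet;

/// Hypothetical build request, as an evaluator might hand it to a builder.
#[derive(Debug)]
struct BuildRequest {
    name: String,
    inputs: Vec<String>, // store paths the build depends on
}

/// The store only answers "is this path present?" and accepts new paths.
trait Store {
    fn contains(&self, path: &str) -> bool;
    fn add(&mut self, path: String);
}

/// The builder only turns a request into an output path.
trait Builder {
    fn build(&self, req: &BuildRequest) -> Result<String, String>;
}

/// Orchestration written purely against the traits: check that all inputs
/// are in the store, run the build, and register the output in the store.
fn realise(store: &mut dyn Store, builder: &dyn Builder, req: &BuildRequest) -> Result<String, String> {
    if let Some(missing) = req.inputs.iter().find(|p| !store.contains(p)) {
        return Err(format!("input {missing} is not in the store"));
    }
    let out = builder.build(req)?;
    store.add(out.clone());
    Ok(out)
}

/// Toy store backed by a set of paths.
struct SetStore(HashSet<String>);

impl Store for SetStore {
    fn contains(&self, path: &str) -> bool {
        self.0.contains(path)
    }
    fn add(&mut self, path: String) {
        self.0.insert(path);
    }
}

/// Toy builder that "builds" by naming an output path.
struct FakeBuilder;

impl Builder for FakeBuilder {
    fn build(&self, req: &BuildRequest) -> Result<String, String> {
        Ok(format!("/nix/store/fake-{}", req.name))
    }
}
```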
Tvix is not intended to *replace* Nix; instead, we want to improve the ecosystem by offering an alternative, fast and reliable implementation for Nix features that are in use today.

As things ramp up we will be posting more information on this blog. For now, you can keep an eye on [`//tvix`](https://code.tvl.fyi/tree/tvix) in the TVL monorepo and subscribe to [our feed](https://tvl.fyi/feed.atom).

Stay tuned!


<span style="font-size: small;">PS: TVL is international, but a lot of the development will take place in our office in Moscow. Say hi if you're around and interested!</span>

@@ -1,165 +0,0 @@

We've now been working on our rewrite of Nix, [Tvix][], for over a year.

As you can imagine, this past year has been turbulent, to say the least, given the regions where many of us live. As a result, we haven't had as much time to work on fun things (like open-source software projects!) as we'd like.

We've all been fortunate enough to continue making progress, but we just haven't had the bandwidth to communicate with you and keep you up to speed on what's going on. That's what this blog post is for.

## Nix language evaluator


The most significant progress in the past six months has been on our Nix language evaluator. To answer the most important question: yes, you can play with it right now in [Tvixbolt][]!

We got the evaluator into its current state by first listing all the problems we were likely to encounter, then solving them independently, and finally assembling all those small-scale solutions into a coherent whole. As a result, we briefly had an impractically large private source tree, which we have since [integrated][] into our monorepo.

This process was much slower than we would have liked, due to code review bandwidth... which is to say, we're all volunteers. People have lives, bottlenecks happen.

Most of this code was either written or reviewed by [grfn][], [sterni][] and [tazjin][] (that's me!).

### How much of eval is working?


*Most of it*! You can enter most (but not *all*, sorry! Not yet, anyway.) Nix language expressions in [Tvixbolt][] and observe how they are evaluated.

There's a lot of interesting stuff going on under the hood, such as:


* The Tvix compiler can emit warnings and errors without failing early, and retains as much source information as possible. This will